Finding and deleting large files in a git repository

Large files can slow down cloning and fetching operations, and make your developers less efficient. Use this guide to find and delete those files.

Why is it important to have small git repositories?

GitHub recommends that git repositories be < 1 GiB in size. Here are the most important reasons why I think this matters:

- It takes longer to clone and fetch larger git repositories. This may increase the time Continuous Integration (CI) jobs take to run, and it may slow down deployments to your hosting infrastructure. It will definitely slow down developers looking to get set up for the first time.

- It adds to the space needed to house the git repository. Both GitHub and GitLab will reach out to you if you are storing too much data. Not to mention the lost GB on your laptop.

Find the current size of your git repo

There is a command built into git that does this very easily (the -H flag for human-readable sizes has been available since 2013):

$ git count-objects -vH
count: 0
size: 0 bytes
in-pack: 4024
packs: 1
size-pack: 4.27 GiB
prune-packable: 0
garbage: 0
size-garbage: 0 bytes

Find the large files in your git repository

Now that you know your git repository is excessively large, the next step is to work out why.
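If you want the "excessively large" judgement to be repeatable, for example in a CI job, a small check against the 1 GiB guideline could look like the sketch below. The function names pack_size_kib and exceeds_limit are made up for this example, and it assumes you run git count-objects -v without -H, in which case git reports size-pack in KiB.

```shell
#!/bin/sh
# Extract the size-pack value from `git count-objects -v` output on stdin.
# Without -H, git reports this number in KiB.
pack_size_kib() {
  awk -F': ' '$1 == "size-pack" { print $2 }'
}

# Succeed (exit 0) when the pack size in KiB ($1) exceeds the limit in KiB ($2).
exceeds_limit() {
  [ "$1" -gt "$2" ]
}

# Usage inside a repository, with 1 GiB = 1048576 KiB as the limit:
#   size=$(git count-objects -v | pack_size_kib)
#   exceeds_limit "$size" 1048576 && echo "repository is over 1 GiB"
```

The parsing is kept separate from the git call so the check can be tried on saved output first.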

This amazing script from Stack Overflow does the job:

git rev-list --objects --all |
  git cat-file --batch-check='%(objecttype) %(objectname) %(objectsize) %(rest)' |
  sed -n 's/^blob //p' |
  sort --numeric-sort --key=2 |
  cut -c 1-12,41- |
  $(command -v gnumfmt || echo numfmt) --field=2 --to=iec-i --suffix=B --padding=7 --round=nearest

N.B. on Mac OSX, you will need brew install coreutils for the magic number formatting to work.

This script lists every file version (blob) in your git repository's history, with the largest at the bottom. In the example git repository I was looking into, these were the largest files:

26aa9bdfd920 564KiB themes/site/hypejs/hypejs.css
1d8f89f0d18a 564KiB themes/site/hypejs/hypejs.css
cf4d7ceb1d4f 684KiB themes/site/css/fonts/fontawesome/fa-brands-400 2.svg
401b7f7b3219 823KiB themes/site/css/fonts/fontawesome/fa-solid-900 2.svg
989b349bb493 4.3GiB files.zip

You can spot a single large zip file, files.zip, in the repository, coming in at a whopping 4.3GiB. In this particular case, a developer accidentally committed the file in one commit and then thought they could remove it by deleting it in the next. Git, however, remembers data in all commits for all time.
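On a repository with a long history the full listing can run to thousands of lines, so it can help to keep only the blobs above a size threshold. The sketch below is one way to do that. The function name blobs_over is made up for this example, and it expects the raw output of the git cat-file step above (sizes in bytes, before the sed, sort, cut and numfmt stages).

```shell
#!/bin/sh
# Print "<size-in-bytes> <path>" for every blob at or above a byte threshold ($1).
# Reads "<objecttype> <sha> <size> <path>" lines on stdin, which is what
# git cat-file --batch-check emits with the format string used in the script above.
blobs_over() {
  awk -v min="$1" '$1 == "blob" && $3 + 0 >= min + 0 {
    size = $3
    sub(/^[^ ]+ [^ ]+ [^ ]+ /, "")   # drop type, sha and size; keep the path, spaces included
    print size, $0
  }'
}

# Usage inside a repository (100 MiB is an arbitrary threshold for this sketch):
#   git rev-list --objects --all |
#     git cat-file --batch-check='%(objecttype) %(objectname) %(objectsize) %(rest)' |
#     blobs_over $((100 * 1024 * 1024))
```

Trees and commits are skipped, so only file contents are reported.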

So how do we actually properly delete files.zip and reclaim that space?

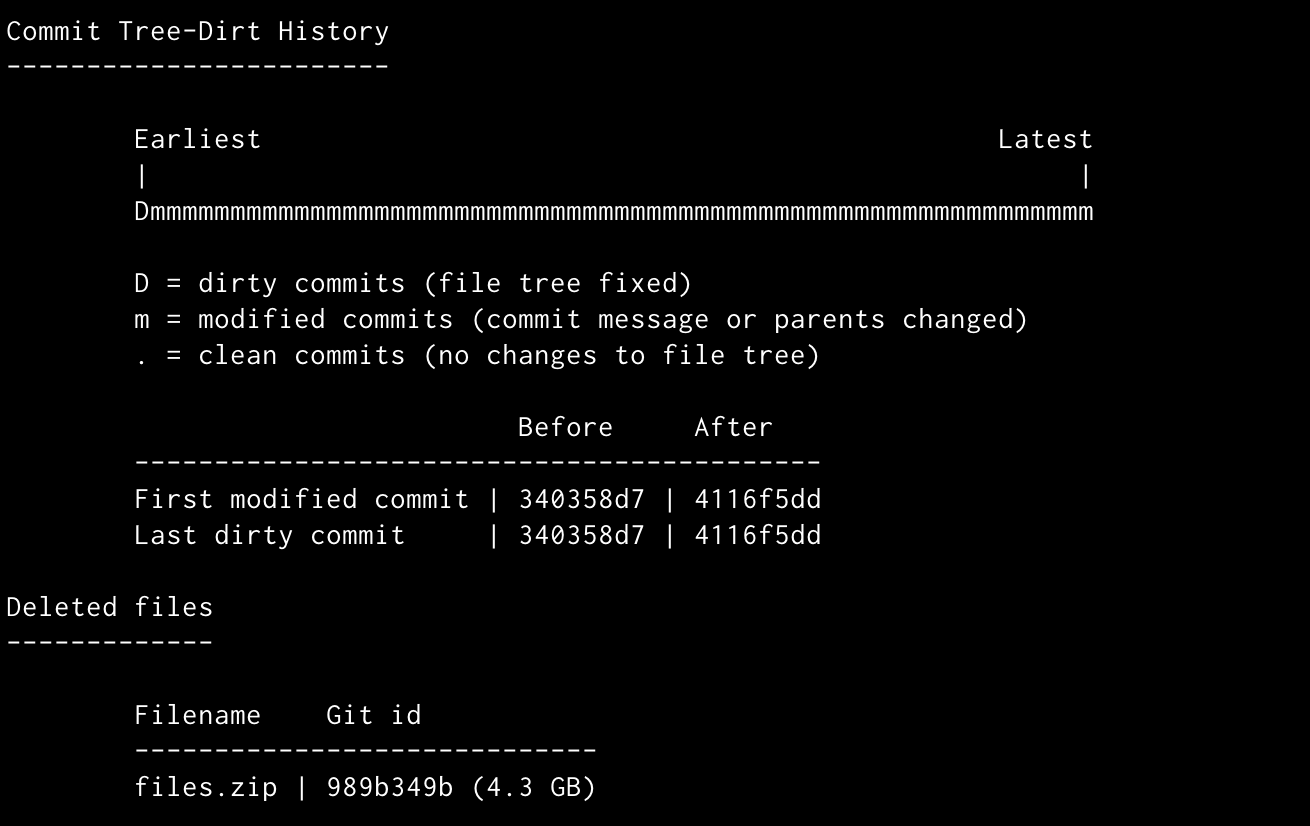

Remove the large files by rewriting git history - using the BFG repo cleaner

The BFG repo cleaner is an excellent example of a tool that makes complex git operations more approachable for the average punter. I use Docker to run it, but if you have Java installed locally, then running it natively might be easier for you.

docker run -it --rm \
  --volume "$PWD:/home/bfg/workspace" \
  koenrh/bfg \
  --strip-blobs-bigger-than 100M

The output from the BFG repo cleaner is amazing as well: it supplies a report showing the files it removed and which commits needed to be altered.
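For anyone who prefers the native route, a rough equivalent of the Docker call might look like the sketch below. The jar location ($HOME/bfg.jar) and the function name run_bfg are assumptions for this example; the jar itself has to be downloaded separately. It also assumes, as the Docker call does, that BFG picks up the repository in the current directory when no path is given.

```shell
#!/bin/sh
# Hypothetical location of a locally downloaded BFG jar; override via the environment.
BFG_JAR="${BFG_JAR:-$HOME/bfg.jar}"

# Run BFG natively from the repository root with the same flag as the Docker call.
# Falls back to printing the command when Java or the jar is missing.
run_bfg() {
  limit="${1:-100M}"
  if command -v java >/dev/null 2>&1 && [ -f "$BFG_JAR" ]; then
    java -jar "$BFG_JAR" --strip-blobs-bigger-than "$limit"
  else
    echo "would run: java -jar $BFG_JAR --strip-blobs-bigger-than $limit"
  fi
}
```

From the root of the repository, calling run_bfg 100M mirrors the threshold used in the Docker example.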

You also need to run git garbage collection to reclaim the space:

git reflog expire --expire=now --all && git gc --prune=now --aggressive

This git gc command strips out the unwanted dirty data. After you are all done, re-run the command to find the new size:

$ git count-objects -vH
count: 0
size: 0 bytes
in-pack: 4024
packs: 1
size-pack: 8.29 MiB
prune-packable: 0
garbage: 0
size-garbage: 0 bytes

If you are happy then force push over your remote:

git push --force

N.B. all rewritten commits will have a different SHA, and all other developers using the same repo will need to take the following steps to ensure they have the most recent version of the git repo:

git fetch origin
git reset --hard origin/master

Hope this helps somebody out there.